AI Evaluation,

Simplified.

Trade guesswork for evidence. elluminate aligns experts and builders around measurable standards—catching failures early, protecting what works, and accelerating the path from proof of concept to production.

Trusted by AI teams at leading companies

The Decision Layer for Reliable AI

elluminate is the collaborative evaluation platform for teams who need to build, launch, and scale AI products with unshakable confidence.

Transform Your AI Development

Turn Domain Expertise into Your Standard for Quality

Align on what "good" means. Give engineers, designers and product leaders a shared definition of quality for your specific use cases.

Replace Guesswork with Rigorous Measurement

Move beyond vibes and anecdotes. Systematically measure your AI's performance to pinpoint where it excels, where it falls short and — most importantly — why.

Streamline the Path from Proof of Concept to Production

Ship with confidence. Establish a repeatable workflow that elevates promising prototypes into safe, compliant and production-ready solutions.

Deliver Results with Certainty

Innovate Faster without Breaking What Works

Move faster, safer. Accelerate your development speed with confidence, knowing you can improve your AI solutions without introducing new risks or regressions.

Gain Accountability You Can Verify

Justify every decision. Build a verifiable narrative for each of your choices made, giving you the evidence needed to win over leadership, auditors and customers.

Empower Your Team to Build Better AI

Unleash your team's talent. Eliminate wasted time on manual testing and spreadsheets so your best talent can focus more on building a better product.

Evidence-Driven Evaluation Workflow

Run experiments, track progress and trust every decision — so you can ship reliable AI with confidence.

Run an Experiment

Your chatbot works perfectly during testing, but then embarrasses you with customers. Sound familiar? Running experiments with elluminate changes that. Test real customer queries with your system and instantly see where it struggles: 100% format compliance (great!), but only 83% of question were answered correctly (problematic!).

Analyzing each failed case with insights into what is going wrong is just one click away. Inspecting the token usage exposes that you are burning money on verbose responses when concise ones would do. Reviewing the response time distribution reveals that 5% of queries are taking unacceptably long.

Gone are the "it seems to work fine" deployments. No more discovering issues from angry customer tickets. Before shipping, you already know your success rate on your specific use cases and edge cases. You measure instead of guessing. You prove instead of hoping. Every release is no longer a gamble, but a confident step forward.

Run an Experiment

Your chatbot works perfectly during testing, but then embarrasses you with customers. Sound familiar? Running experiments with elluminate changes that. Test real customer queries with your system and instantly see where it struggles: 100% format compliance (great!), but only 83% of question were answered correctly (problematic!).

Analyzing each failed case with insights into what is going wrong is just one click away. Inspecting the token usage exposes that you are burning money on verbose responses when concise ones would do. Reviewing the response time distribution reveals that 5% of queries are taking unacceptably long.

Gone are the "it seems to work fine" deployments. No more discovering issues from angry customer tickets. Before shipping, you already know your success rate on your specific use cases and edge cases. You measure instead of guessing. You prove instead of hoping. Every release is no longer a gamble, but a confident step forward.

Track Progress Across Versions

Every iteration brings you closer to production. Run an experiment with your initial prompt version, inspect the ratings and analyze the outputs. Figure out what needs to change to improve the next version. Refine, evaluate, repeat.

View the history of your prompt versions and know exactly which changes moved the needle and which did not. Roll back confidently if needed. Your path from prototype to production is mapped out clearly from start to finish.

Track Progress Across Versions

Every iteration brings you closer to production. Run an experiment with your initial prompt version, inspect the ratings and analyze the outputs. Figure out what needs to change to improve the next version. Refine, evaluate, repeat.

View the history of your prompt versions and know exactly which changes moved the needle and which did not. Roll back confidently if needed. Your path from prototype to production is mapped out clearly from start to finish.

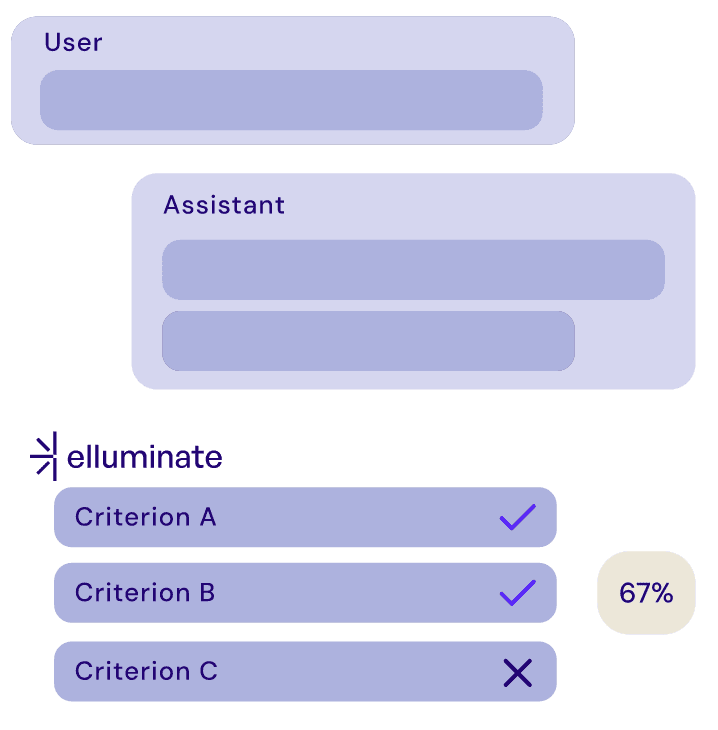

Trust Every Decision

Top-level metrics only tell half the story. To truly understand your AI's performance, dive deep into the individual responses. Inspect the exact prompt sent and the complete response generated. Review how each evaluation criterion was assessed, along with the rationale behind each judgment.

In this example, the chatbot correctly refused an off-topic weather question, exactly as instructed. The green checkmark indicates that the alignment criterion passed along with the detailed reasoning behind this assessment. You can additionally filter by failures to spot patterns and sort by token usage to optimize costs.

Every data point provides insight into your system's behavior. elluminate powers the steps required to build an AI you can trust: look at the data, understand the patterns and iterate according to the evidence.

Trust Every Decision

Top-level metrics only tell half the story. To truly understand your AI's performance, dive deep into the individual responses. Inspect the exact prompt sent and the complete response generated. Review how each evaluation criterion was assessed, along with the rationale behind each judgment.

In this example, the chatbot correctly refused an off-topic weather question, exactly as instructed. The green checkmark indicates that the alignment criterion passed along with the detailed reasoning behind this assessment. You can additionally filter by failures to spot patterns and sort by token usage to optimize costs.

Every data point provides insight into your system's behavior. elluminate powers the steps required to build an AI you can trust: look at the data, understand the patterns and iterate according to the evidence.

Here is what you can get done with elluminate

Move Fast, Keep Control.

Standardize, measure and ship with confidence.

Today

First Hour

After 30 Days

Manual QA delays launches

Surprise failures arise in production

Improvements are hard to quantify

Import existing test cases

Onboard your team and set baselines

Run your first eval and identify quick wins

Fewer production incidents

Faster iteration and experimentation

Full stakeholder confidence

Today

Manual QA delays launches

Surprise failures arise in production

Improvements are hard to quantify

First Hour

Import existing test cases

Onboard your team and set baselines

Run your first eval and identify quick wins

After 30 Days

Fewer production incidents

Faster iteration and experimentation

Full stakeholder confidence

Trusted by Leading AI Teams

See how teams across industries are building more reliable AI systems with elluminate

"In 8 years of AI development, we've learned that the difference between playing around and enterprise-ready production lies in rigorous evaluation. elluminate enables us to not only deliver innovative AI solutions to our clients, but to demonstrably prove their reliability. This builds trust and significantly accelerates deployment decisions."

"For a health insurance company, accuracy and security in AI applications are absolute prerequisites. With elluminate, we can meet these requirements seamlessly. Every iteration of our AI is automatically and thoroughly validated, ensuring that it responds not only competently but also reliably to critical queries. This gives us the necessary confidence to deploy our AI solutions boldly and successfully."

Frequently Asked Questions

Everything you need to know about AI evaluation and how elluminate can help your team

Have more questions? We'd love to help you get started.

Contact usPlatform Features

Everything you need tobuild reliable AI products.

Coming Soon

Several new features are currently in development:

See how elluminate can elevate your team today.

Schedule a call with one of our founders, and discover evaluation strategies for your use cases.

Schedule a demo with our founders